floating-point

what

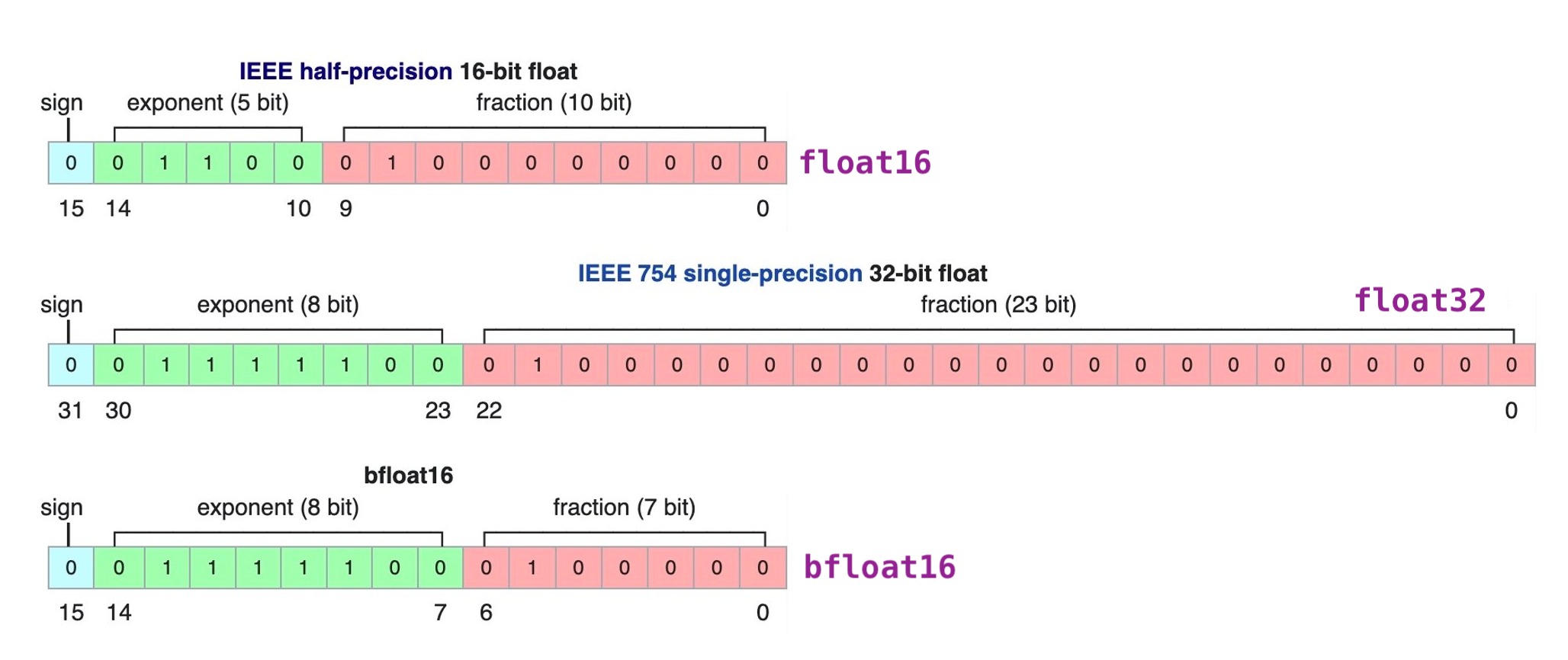

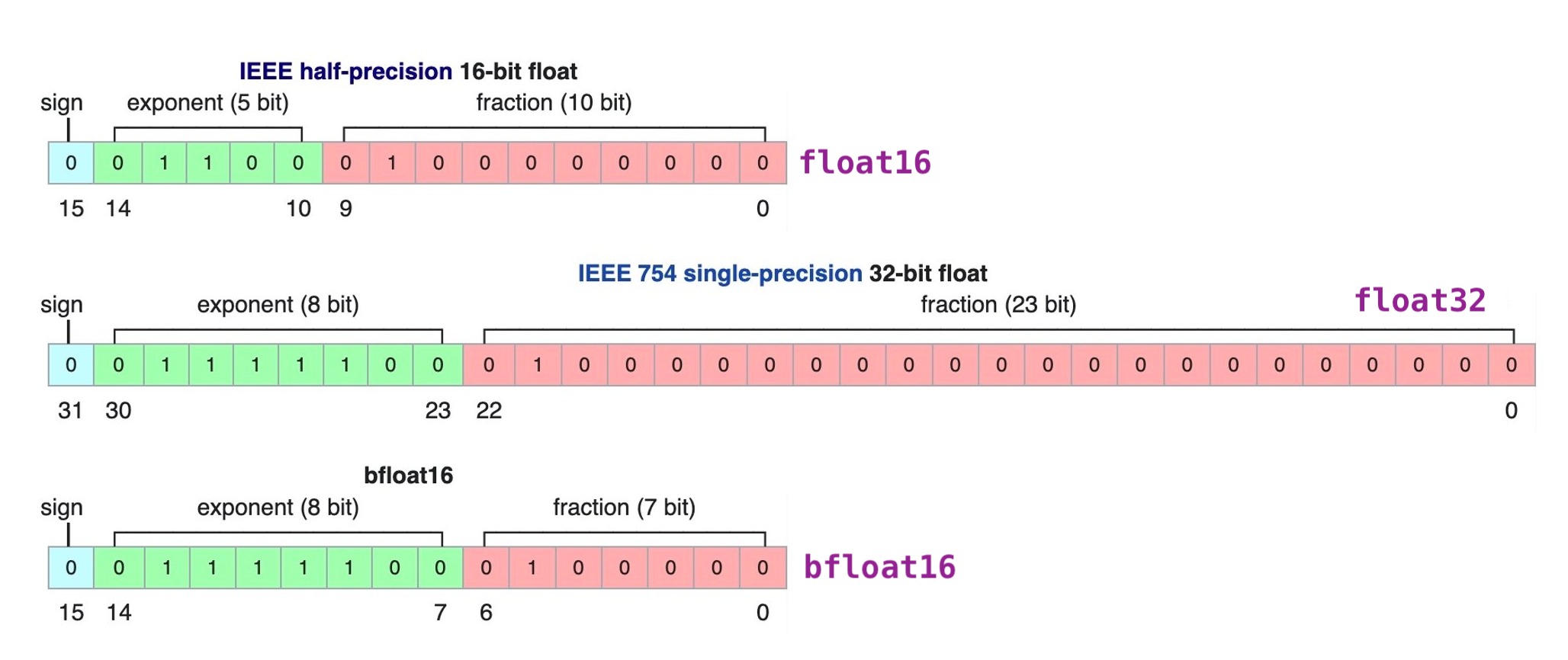

- BF16 (bfloat16) is a 16-bit floating-point format that, compared to FP16, moves 3 bits from the mantissa to the exponent: 1 sign bit, 8 exponent bits, 7 mantissa bits (FP16 has 1, 5, 10)

- uses 8 bits for the exponent (same as FP32), whereas FP16 uses 5 [1]
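To make the bit split concrete, here is a small sketch that derives the largest finite value of each format from its exponent and mantissa widths. The `FORMATS` table and the `max_finite` helper are names made up for this note, and the formula assumes an IEEE-style layout where the top exponent code is reserved for inf/NaN.

```python
# (sign bits, exponent bits, mantissa bits) for each format
FORMATS = {
    "FP32": (1, 8, 23),
    "FP16": (1, 5, 10),
    "BF16": (1, 8, 7),
}

def max_finite(exp_bits, man_bits):
    """Largest finite value of an IEEE-style binary format."""
    # The all-ones exponent code is reserved for inf/NaN,
    # so the largest usable unbiased exponent equals the bias.
    bias = 2 ** (exp_bits - 1) - 1
    return (2 - 2.0 ** -man_bits) * 2.0 ** bias

for name, (sign, exp, man) in FORMATS.items():
    total = sign + exp + man
    print(f"{name}: {total} bits, max finite ~ {max_finite(exp, man):.4g}")
```

FP16 tops out at 65504, while BF16 reaches roughly the same magnitude as FP32 (about 3.4e38), at the cost of fewer mantissa bits.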

why use BF16?

- some layers, such as Convolution or Linear, don't need high precision [2]

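- reduces the memory footprint, both during training and when storing the model: each value takes 16 bits instead of the 32 bits of FP32

- less prone to overflow and underflow than FP16, because the exponent has the same width as in FP32 and so covers roughly the same dynamic range

- converting from FP32 to BF16 is simply dropping the lower 16 bits (the least significant mantissa bits); this is truncation, i.e. rounding toward zero, rather than rounding to nearest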
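The conversion in the last bullet can be sketched with the standard `struct` module alone. The helper names below are made up for this note, and the sketch implements plain truncation only; real frameworks and hardware may round to nearest instead, so results can differ in the last mantissa bit.

```python
import struct

def fp32_bits(x):
    """Return the 32-bit pattern of x stored as an FP32 value."""
    return struct.unpack("<I", struct.pack("<f", x))[0]

def fp32_to_bf16_bits(x):
    """Truncate FP32 to BF16: keep the top 16 bits
    (sign, 8 exponent bits, top 7 mantissa bits)."""
    return fp32_bits(x) >> 16

def bf16_bits_to_float(b):
    """Widen a BF16 bit pattern back to a float by zero-filling
    the 16 mantissa bits that were dropped."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]

x = 3.14159
b = fp32_to_bf16_bits(x)
print(hex(b), bf16_bits_to_float(b))  # 0x4049 3.140625
```

Going back from BF16 to FP32 is exact, since it only appends zero bits; the precision is lost on the way down.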

sources