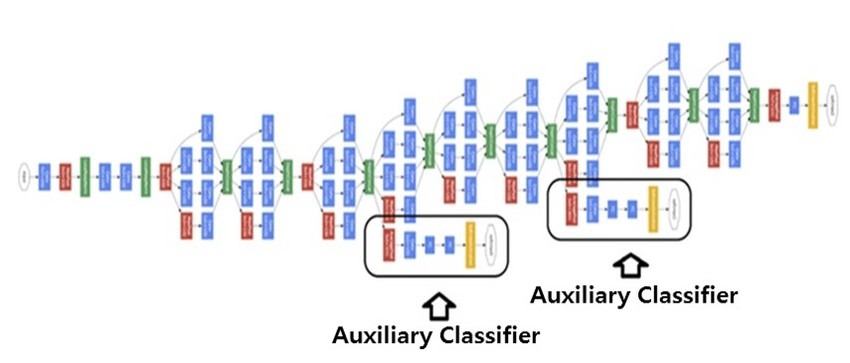

- Some CNN architectures (e.g. GoogLeNet) attach auxiliary losses at different depths of the model

- The original motivation was to mitigate vanishing gradients

- These auxiliary losses can be the same as the final loss (e.g. the same classification objective)

- This means the model can perform the same task at different depths, albeit at different performance levels

- Question: can we apply the same idea to language models?

- i.e., can different depths of a language model perform the same task?
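A minimal sketch of the shared-loss idea in plain Python, with no deep learning framework. Each depth is assumed to have its own output head that produces logits. Every head is scored with the same cross-entropy loss, and the auxiliary losses are added to the final loss with a discount weight. The function names, the input layout, and `aux_weight=0.3` are assumptions for illustration. For a language model, the classes would be vocabulary tokens and `target` the next token. How each intermediate layer gets its head (separate heads or a shared output projection) is left open here.

```python
import math
from typing import List, Sequence, Tuple


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Cross-entropy for one example: -log softmax(logits)[target]."""
    m = max(logits)  # subtract the max before exp for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def deep_supervision_loss(
    per_depth_logits: Sequence[Sequence[float]],
    target: int,
    aux_weight: float = 0.3,  # assumed discount weight for auxiliary heads
) -> Tuple[float, List[float]]:
    """Combine the same loss computed at several depths.

    per_depth_logits holds logits from heads at increasing depth; the last
    entry is the final output head, the earlier ones are auxiliary heads.
    Returns (total loss, per-depth losses).
    """
    per_depth = [cross_entropy(logits, target) for logits in per_depth_logits]
    total = per_depth[-1] + aux_weight * sum(per_depth[:-1])
    return total, per_depth


# Hypothetical logits over 3 classes (or a 3-token vocabulary) from heads
# at depths 1, 2 and 3 (final); the correct class is 0.
logits_by_depth = [
    [0.2, 0.1, 0.0],
    [1.0, 0.0, 0.0],
    [3.0, 0.0, 0.0],
]
total, per_depth = deep_supervision_loss(logits_by_depth, target=0)
print(total, per_depth)
```

The per-depth losses are one way to quantify "different performance levels": in this made-up example the deeper heads are more confident in the correct class, so their loss is lower.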