what

-

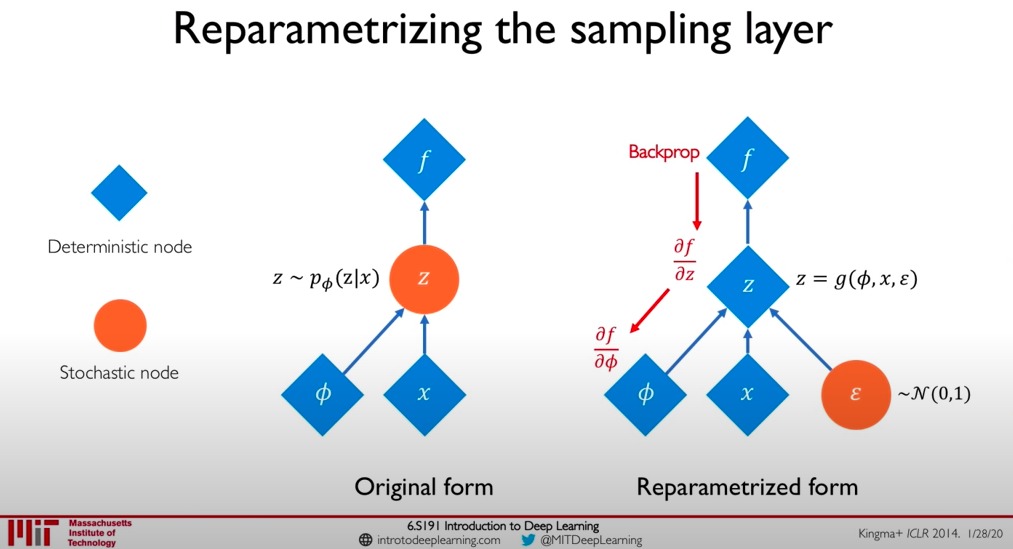

A method for computing or estimating gradients of expectations over random variables, taken with respect to the parameters of their distribution

-

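Most commonly, it refers to sampling from a normal distribution $\mathcal{N}(\mu, \sigma^2)$ by transforming a sample from a standard normal distribution, using the following formula:

$$z = \mu + \sigma \epsilon$$

where $\epsilon$ is drawn from $\mathcal{N}(0, 1)$.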
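A minimal NumPy sketch of this formula is shown here. The function name `sample_normal` and the parameter values are assumptions chosen for illustration.

```python
import numpy as np

def sample_normal(mu, sigma, n, rng):
    """Draw n samples from N(mu, sigma^2) by shifting and scaling standard-normal noise."""
    eps = rng.standard_normal(n)   # eps ~ N(0, 1), independent of mu and sigma
    return mu + sigma * eps        # z = mu + sigma * eps

rng = np.random.default_rng(0)
z = sample_normal(mu=1.5, sigma=0.5, n=100_000, rng=rng)
print(z.mean(), z.std())  # should be close to 1.5 and 0.5
```

The sample mean and standard deviation should land near `mu` and `sigma`, even though the only random draw is from the standard normal.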

-

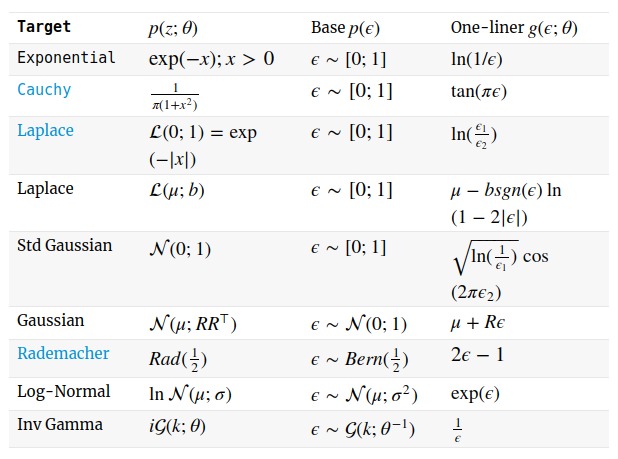

Reparameterization is a method of generating non-uniform random numbers by transforming some base distribution, $p(\epsilon)$, to a desired distribution, $p(z; \theta)$. The transformation from the base to the desired distribution can be written as $g(\epsilon; \theta)$, as follows 3:

$$\epsilon \sim p(\epsilon), \qquad z = g(\epsilon; \theta)$$
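As one illustration of a base-to-target transformation beyond the Gaussian case, the sketch below samples an exponential distribution by pushing uniform noise through the inverse CDF. The exponential target and the name `sample_exponential` are assumptions for this example, not taken from the cited source.

```python
import numpy as np

def sample_exponential(rate, n, rng):
    """Sample Exponential(rate) by transforming uniform base noise (inverse-CDF method)."""
    u = rng.uniform(size=n)          # base distribution: u ~ U[0, 1)
    return -np.log1p(-u) / rate      # g(u; rate) = -ln(1 - u) / rate

rng = np.random.default_rng(0)
samples = sample_exponential(rate=2.0, n=200_000, rng=rng)
print(samples.mean())  # should be close to 1 / rate = 0.5
```

Here the parameter (`rate`) appears only inside the deterministic transformation, so the output is a differentiable function of it for any fixed `u`.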

why

-

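We use the reparameterization trick to express a gradient of an expectation, $\nabla_\theta \mathbb{E}_{p(z; \theta)}[f(z)]$, as an expectation of a gradient, $\mathbb{E}_{p(\epsilon)}[\nabla_\theta f(g(\epsilon; \theta))]$. Provided $g$ is differentiable - something Kingma4 emphasizes - we can then use Monte Carlo methods to estimate $\mathbb{E}_{p(\epsilon)}[\nabla_\theta f(g(\epsilon; \theta))]$. 5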
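The step from one form to the other can be sketched in a single chain. It assumes that $z = g(\epsilon; \theta)$ with $\epsilon \sim p(\epsilon)$ not depending on $\theta$, and that the gradient and the integral can be exchanged. The symbols $f$, $N$, and $\epsilon_i$ are notation introduced here for illustration.

$$
\nabla_\theta \mathbb{E}_{p(z; \theta)}[f(z)]
= \nabla_\theta \mathbb{E}_{p(\epsilon)}[f(g(\epsilon; \theta))]
= \mathbb{E}_{p(\epsilon)}[\nabla_\theta f(g(\epsilon; \theta))]
\approx \frac{1}{N} \sum_{i=1}^{N} \nabla_\theta f(g(\epsilon_i; \theta)),
\qquad \epsilon_i \sim p(\epsilon)
$$

The second equality is the one that needs the base distribution to be free of $\theta$: only then can the gradient move inside the expectation.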

-

The issue is not that we cannot backprop through a “random node” in any technical sense. Rather, backpropagating would not compute an estimate of the derivative (because $\nabla_\theta \mathbb{E}_{p(z; \theta)}[f(z)]$ is the gradient w.r.t. $\theta$ of an expectation taken w.r.t. $z \sim p(z; \theta)$, which itself depends on $\theta$). Without the reparameterization trick, we have no guarantee that sampling large numbers of $z$ will help converge to the right estimate of $\nabla_\theta \mathbb{E}_{p(z; \theta)}[f(z)]$. 5
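A small numerical sketch can make this concrete. It assumes a toy objective $f(z) = z^2$ with $z \sim \mathcal{N}(\mu, \sigma^2)$, so that $\mathbb{E}[f(z)] = \mu^2 + \sigma^2$ and the true gradients are $2\mu$ and $2\sigma$. The objective and all names below are chosen for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
mu, sigma, n = 1.0, 2.0, 200_000   # hypothetical parameter values

def df_dz(z):
    """Derivative of the toy objective f(z) = z**2."""
    return 2.0 * z

eps = rng.standard_normal(n)
z = mu + sigma * eps

# Naive view: z is just a sampled number, so f(z) has no visible dependence
# on mu and the per-sample "gradient" is zero, however many samples we draw.
naive_grad_mu = np.zeros(n).mean()

# Reparameterized view: z = mu + sigma * eps, so dz/dmu = 1 and dz/dsigma = eps.
grad_mu = (df_dz(z) * 1.0).mean()
grad_sigma = (df_dz(z) * eps).mean()

print(naive_grad_mu)          # 0.0, not the true value 2 * mu
print(grad_mu, grad_sigma)    # should be close to 2 * mu = 2 and 2 * sigma = 4
```

Drawing more samples does not move the naive value toward $2\mu$, while the reparameterized averages tighten around the true gradients.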

-

The “trick” part of the reparameterization trick is that you make the randomness an input to your model instead of something that happens “inside” it, which means you never need to differentiate with respect to sampling (which you can’t do). 6
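The sketch below illustrates the "randomness as an input" idea, under the assumption of a toy model that maps parameters `theta = (mu, log_sigma)` and externally sampled noise `eps` to `sin(z)`. The model and its names are hypothetical. Because the function is deterministic once `eps` is fixed, an ordinary finite-difference derivative with respect to `mu` is well defined.

```python
import numpy as np

def model(theta, eps):
    """Deterministic in (theta, eps): all randomness arrives through eps."""
    mu, log_sigma = theta
    z = mu + np.exp(log_sigma) * eps
    return np.sin(z)

rng = np.random.default_rng(0)
eps = rng.standard_normal()        # noise is sampled outside the model
theta = np.array([0.3, -1.0])

out_a = model(theta, eps)
out_b = model(theta, eps)          # same inputs give the same output

# Central finite difference w.r.t. mu, holding eps fixed.
h = 1e-6
step = np.array([h, 0.0])
fd_grad_mu = (model(theta + step, eps) - model(theta - step, eps)) / (2 * h)

# Analytic derivative for comparison: d sin(z) / d mu = cos(z).
exact_grad_mu = np.cos(theta[0] + np.exp(theta[1]) * eps)
print(fd_grad_mu, exact_grad_mu)
```

Nothing inside `model` samples anything, so differentiating it never involves differentiating a sampling operation.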

notes

- Some commonly used reparameterizations

(source 1, 7)

(source 1, 7)

- Multiple groups realized this fact at around the same time in 2013: Rezende et al., Titsias & Lazaro-Gredilla, and Kingma & Welling4. (All three papers were published in 2014.) However, it’s usually the VAE paper that gets all the credit. 7

(source

(source